In software development, the principles of abstraction and separation of concerns and responsibilities are essential for creating clean, maintainable, scalable, and secure systems. Artificial intelligence (AI) is no exception. In this essay, I explore the concept of AI system (or core) space versus application space, drawing an analogy from computer operating systems (OS).

In both computer operating systems and artificial intelligence, distinct logical layers separate core low-level functionalities from application-level functionalities and user interactions.

In operating systems, the system space is where the kernel operates, providing core services and maintaining system integrity. Access to this space is typically privileged or restricted, preventing regular users and applications from direct access. The user space, on the other hand, is where applications run, interacting with the system space through system calls (APIs). This separation not only protects the system from potentially harmful user applications but also simplifies the process for developers to write and deploy applications.
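As a small illustration of that boundary, the sketch below shows user-space Python code asking the kernel to do work on its behalf. It relies on the standard library's `os.pipe`, `os.write`, and `os.read`, which are thin wrappers over the corresponding system calls; the function name `roundtrip_through_kernel` is a hypothetical one chosen for this example.

```python
import os


def roundtrip_through_kernel(message: bytes) -> bytes:
    """Send bytes through a kernel-managed pipe and read them back.

    The pipe buffer lives in system space. This user-space code never
    touches it directly; it only holds file descriptors and issues
    system calls against them.
    """
    read_fd, write_fd = os.pipe()  # kernel allocates the pipe
    try:
        # Assumes a small message that fits in the pipe buffer.
        os.write(write_fd, message)  # write system call
        return os.read(read_fd, len(message))  # read system call
    finally:
        os.close(read_fd)
        os.close(write_fd)
```

The application only sees two integers (the file descriptors) and a handful of calls. How the kernel stores and moves the bytes stays hidden, which is the property the AI analogy builds on.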

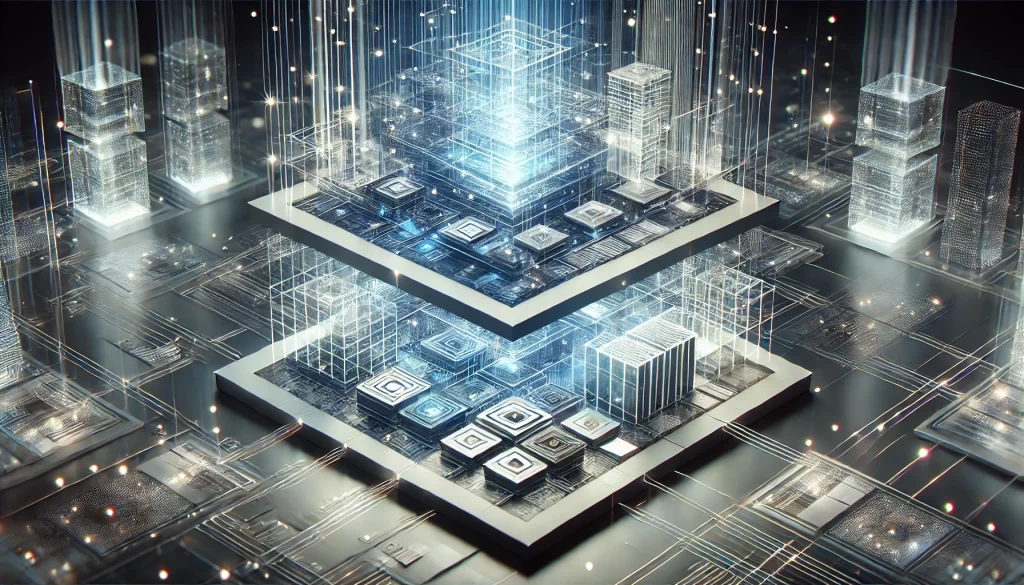

Let’s now look at the AI space and an illustration of this layered view of AI System/Core and Application Spaces.

Figure 1 – AI App and System/Core Spaces or Layers

The AI System (or Core) space is analogous to the system space in an operating system (OS). This space encompasses the core models, algorithms, and infrastructure managed by AI engineers. It handles complex operations such as model training and inference, fine-tuning, optimization, and related infrastructure. In contrast, the AI Application (App) space includes the interfaces, APIs, and tools that enable users and developers to interact with and build applications on top of AI functionalities. This separation allows for the creation of valuable applications such as chatbots, intelligent agents, and generative AI apps. It also lets AI app developers and users leverage AI capabilities safely and effectively without delving into low-level complexities, thereby lowering the barriers to entry.
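One way to picture this layering in code is a narrow interface that plays the role of a system call. The sketch below is only an illustration under stated assumptions: `InferenceAPI`, `ToyCoreModel`, and `ChatbotApp` are hypothetical names, and the "model" is a canned lookup table rather than a real LLM.

```python
from typing import Protocol


class InferenceAPI(Protocol):
    """The narrow surface the app space is allowed to call (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class ToyCoreModel:
    """Stand-in for the AI System/Core space; not a real model."""

    def __init__(self) -> None:
        # Internal state that the app layer never reads or modifies.
        self._canned = {"hello": "Hello! How can I help?"}

    def complete(self, prompt: str) -> str:
        key = prompt.strip().lower()
        return self._canned.get(key, f"I do not have an answer for: {prompt}")


class ChatbotApp:
    """Stand-in for the AI App space; depends only on InferenceAPI."""

    def __init__(self, core: InferenceAPI) -> None:
        self._core = core

    def reply(self, user_message: str) -> str:
        return "Bot: " + self._core.complete(user_message)
```

Because `ChatbotApp` depends only on `complete`, the core could in principle be retrained, fine-tuned, or swapped out without changing application code, much as a kernel can change internally behind a stable system call interface.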

The following table explores the AI System/Core and Application spaces in more detail.

Table 1 – AI System versus App Space

| | AI System/Core Space (Core AI architectures, models, algorithms, and infrastructure) | AI App Space (Interfaces, APIs, and tools and Apps for user interaction) |
|---|---|---|
| Access | Restricted to AI developers and engineers | Accessible to end-users and application developers |
| Functions | Access to models, model training, fine-tuning, and optimization | Provides AI-powered features like chatbots, recommendation systems, and voice assistants |
| Complexity | Involves complex operations and technical processes | Simplifies AI functionalities for easy user interaction |
| Security | Ensures robustness and security of AI operations | Designed to be user-friendly and safe for interaction |
| Purpose | Focuses on core AI operations and efficiency | Delivers AI capabilities to users in an accessible manner |
| Examples | AI algorithms, architectures, and models. Training a neural network, optimizing algorithms | Using a chatbot, getting recommendations on a platform |

Next, let’s explore additional examples.

While a large language model (LLM) such as GPT clearly belongs in the AI System/Core space, the placement of Retrieval-Augmented Generation (RAG) might not be as obvious. Let’s explore this further. RAG is a popular technique used to enhance the responses of LLMs by integrating information retrieval systems with these models. AI developers typically work with RAG to extend or augment LLM capabilities.

While we could debate whether RAG serves as a middle layer or middleware within the application space, in this essay I focus on distinguishing core, low-level, complex functionalities and services from higher-level functionalities and applications. By this criterion, RAG belongs to the AI System/Core space because of its intricate integration of information retrieval systems with LLMs, which enhances responses by referencing external knowledge bases. This integration involves combining various data sources, potentially through ETL (Extract, Transform, Load) data pipelines, and applying enrichment methods such as semantic enrichment. Additionally, sophisticated techniques such as embedding models, vector search, graph search, or contextual augmentation are used to retrieve accurate and relevant information, which is then merged with the generative capabilities of LLMs.
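To make the retrieve-then-augment flow concrete, here is a deliberately tiny sketch. It swaps the learned embedding model for a bag-of-words count vector and the vector database for an in-memory list, so it shows only the shape of the technique; all function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (assumption: stands in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Vector search step: rank documents by similarity to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Contextual augmentation step: prepend retrieved text to the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

In a real pipeline the resulting prompt would be passed to an LLM for generation. Even at this toy scale, the tokenization, scoring, and ranking details are the kind of machinery that sits in the System/Core space rather than in an application.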

Once built, the app-specific RAG pipeline can be used by applications within the AI Application space like any other service. From this perspective, RAG’s benefits are delivered to users through applications such as chatbots and search engines. Developers access RAG functionalities via APIs, which simplifies integration into user-facing applications.

Table 2 – RAG in the System/Core versus the User/App Space

| | RAG Usage in AI System/Core Space | RAG Usage in AI App Space |
|---|---|---|
| Core AI Models | RAG integrates retrieval systems with LLMs | Apps use RAG-enhanced models in applications |
| Algorithms | RAG uses and implements sophisticated retrieval and generation algorithms, for example Vector or Graph search, Contextual Augmentation, or Hybrid Retrieval | Apps use RAG via API to augment LLM responses |
| Infrastructure | RAG typically requires robust data handling and integration | Apps use RAG via APIs while hiding the complexity of data handling and data source integrations |
| AI and Data Governance | AI and Data Governance policies and guidelines are (or should be) established before information is exposed via RAG | Apps use RAG without having to implement AI and Data Governance concerns |
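
The governance row can be sketched as a policy that the core applies before any document becomes retrievable, so that applications never need to re-implement it. This is a minimal illustration under assumed names: `Document`, `GovernedKnowledgeBase`, and the `"public"` / `"internal"` labels are all hypothetical, and keyword matching stands in for real retrieval.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    classification: str  # hypothetical labels: "public" or "internal"


class GovernedKnowledgeBase:
    """Core-side store that applies a governance policy at ingestion time."""

    def __init__(self, documents, allowed=frozenset({"public"})):
        # Documents outside the allowed classifications are never exposed.
        self._exposed = [d for d in documents if d.classification in allowed]

    def search(self, keyword: str) -> list[str]:
        """App-facing call: simple keyword match over exposed documents only."""
        needle = keyword.lower()
        return [d.text for d in self._exposed if needle in d.text.lower()]
```

An application calling `search` cannot reach the filtered-out documents, because the policy was enforced below the API it uses.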

In Summary / Final Thoughts

In both operating systems and AI, the distinction between system space (or low-level AI system/core space) and user space (or AI App space) helps maintain clear separation of concerns and responsibilities.

- The low-level AI System/Core Space manages core functionalities, concealing complexity while ensuring robust performance and safeguarding critical operations.

- The AI App Space is where AI is applied, where users and developers create and utilize AI applications that interact with lower-level AI layers, without needing to understand the underlying complexities.

This analogy is not meant to oversimplify AI systems, which involve a wide range of components and interactions that may not fit neatly into the System space and App space framework. In AI, the boundaries between layers can be more fluid than in an OS. For example, we could debate whether RAG is a middle layer, note that models are not as tightly protected from a security perspective, and recognize that AI and data governance are very important aspects to consider. As AI technologies evolve and security and privacy concerns become more apparent, I expect the practice of separating App space from System space to become more important.

This separation enables application innovation and usability. With proper abstractions, end-users and application developers can benefit from advanced technologies while the underlying complexity stays hidden. It also makes application functionality, security, stability, and efficiency easier to reason about, simplifying the overall system without compromising its integrity.

Carlos Enrique Ortiz (2025)